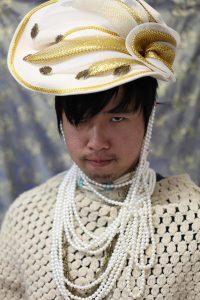

Robert Yang

Adjunct Faculty

Art, Media & Technology : Building Worlds

Panel:

10:30 am to 11:30 am

Beyond the Classroom: How do we teach VR?

VR/AR/MR in an arts and humanities design school and university

About:

Robert Yang is an indie game developer and part-time academic in New York City. He regularly teaches games at NYU Game Center at New York University, IDM at NYU Tandon School of Engineering, and MFADT at Parsons School of Design. He has given talks about games at GDC, IndieCade, Queerness and Games Conference, and Games for Change. He holds a BA in English Literature from UC Berkeley, and an MFA in Design and Technology from Parsons School of Design.

Reflective Essay:

First of all, we have to acknowledge that universities are skeptical of investing in VR. Getting money and resources to do VR research can feel like pulling teeth, and I can't blame the academy: VR is not proven, and it may well "fail" to catch on, just as it did in the 1990s. Nonetheless, we must prepare for the possibility that it *may* succeed, given how much money is being poured into it, and that VR might become a pervasive technology that changes society as we know it. As someone who has taught 3 semesters of VR courses at Parsons and NYU, I think teaching virtual reality requires 3 "pillars": lived experience, theoretical context, and technical training.

By "lived experience" I mean actually using VR headsets first-hand and knowing what it feels like. Imagine someone trying to design for a touchscreen phone without ever having used one. So much of using VR goes beyond the optics and haptics; it's also about the social context, and how the technology actually fits into someone's life and situation. It's worth noting here that modern VR is made of two interfaces that are totally new to the public: a headset and a motion controller. Not only do we have to figure out one new interface, we have to figure out both at once, and how they relate to each other. It's a lot to take in, and the only way to understand it is to use it for hours and hours. You can't learn what VR is like by reading about it, or even by watching someone else use it; you have to experience it yourself.

By "theoretical context" I mean the history of VR, and the body of critical VR theory developed by academics in the 1990s. Today, the technology is finally "catching up" to the theory, and we're able to actually test a lot of theories of embodiment and presence… and it turns out some of them were wrong. Today's new generation of technologists and theorists must "re-discover" the theory that informs our current rhetoric and conversation surrounding VR, and critically re-examine it. VR theorists of the 1990s did not witness the rise of social media, mobile computing, and the 2016 election. We have to fix the theory and update how we talk about VR. Like, can we finally kill the dream of the holodeck? No one wants a holodeck.

By "technical training" I mean the code and know-how required to build VR experiences. There is a huge skill barrier: the ideal VR designer needs to know programming, 3D graphics, and user interaction design. At Parsons we do our best to impart this interdisciplinary training, but the fact is that each of those domains is a separate four-year degree. People are developing lots of simplified tools for working in VR to lower this barrier to entry. As a university, though, we must not make students reliant on these "baby VR" tools; we have to teach the industry-standard tools so they can have independent careers in media. I'm extremely relieved we're developing a whole Immersive Storytelling program now, because I'm kind of tired of trying to fit years of material into one semester.