So, a few related updates. First of all, it seems the LCCS kids were creating assets and ideating for a VR environment. While a Dome could technically count as a virtual environment, people would still be free to walk around and interact with it all, so in the end this will be a totally different, better, although more complex experience to address.

There also seem to be more assets, such as creatures and the environment, that the kids wanted to upload and design. The script was also originally written as a series of very dynamic scene shifts, which will not work at all for a projected environment. From my experience with large-scale projection mapping, the scene cannot move too fast, otherwise it just becomes a blur, which makes the audience nauseous. It’s a similar issue with VR, which is why VR games often limit the player’s movement and instead throw things at the player to interact with. It might be different with projection mapping inside a dome environment, but even then I’d expect the latency to be simply too high, so, again, objects moving too fast through the scene will likely not work well (if manufacturers can’t get IPS monitors right, and even mine lags behind, I’d be surprised if they did a better job with projectors).
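
To put rough numbers on the "too fast" argument, here is a small illustrative calculation. All inputs (a creature crossing half the dome in 2 s, 60 fps, 50 ms latency) are hypothetical placeholders, not measurements of any real projector or dome. The first function gives how far an object jumps between frames; the second gives how far it trails behind when its motion reacts to something in the room (e.g. a tracked visitor) and the pipeline adds latency.

```python
# Illustrative arithmetic only: every number passed in below is a hypothetical
# placeholder, not a measurement of a real projector or dome.


def degrees_per_frame(sweep_degrees, sweep_seconds, fps):
    """Angular distance an object jumps between two consecutive frames."""
    angular_speed = sweep_degrees / sweep_seconds  # degrees per second
    return angular_speed / fps


def lag_degrees(sweep_degrees, sweep_seconds, latency_ms):
    """How far behind its intended position an object is drawn when the
    projection pipeline adds latency_ms of delay (relevant when the motion
    reacts to something in the room, e.g. a tracked visitor)."""
    angular_speed = sweep_degrees / sweep_seconds
    return angular_speed * latency_ms / 1000.0


if __name__ == "__main__":
    # Hypothetical: a creature crosses half the dome (180 degrees) in 2 s at
    # 60 fps, with an assumed 50 ms end-to-end latency.
    print(degrees_per_frame(180, 2, 60), lag_degrees(180, 2, 50))  # 1.5 4.5
    # The same crossing slowed down to 10 s.
    print(degrees_per_frame(180, 10, 60), lag_degrees(180, 10, 50))
```

Slowing the crossing down 5× shrinks both the per-frame jump and the latency offset 5×, which is the basic case for keeping motion in the dome calm.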

Some sort of interaction is required: the children want some to be embedded, although it is still ambiguous what the interactions would actually be.

I see that the Blussion and Oceanic Odyssey projects are very alike, although a few features vary greatly. Based on the email follow-up, it seems these two projects may turn out to be different after all. Hosting two projects within a dome shouldn’t be too much of an issue; for instance, the scene could change after a fixed time, from Odyssey to Blussion. If the two were incorporated together, I’d say the effects showcased in Blussion could be achieved in Unity by tinkering with shaders. I have some basic knowledge of this (solid workshop led by Justin on a Friday before spring break!)
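
The "changing scene over a fixed time" idea boils down to a looping schedule. In Unity this would live in a C# script; the Python below is only a language-neutral sketch of the timing logic. The durations and the 10 s crossfade are hypothetical placeholders. The returned `blend` value could drive, for example, a fade to black or a shader parameter between the two scenes.

```python
# Hypothetical schedule: scene names come from the notes, durations are placeholders.
SCENES = [("Oceanic Odyssey", 300.0), ("Blussion", 300.0)]
FADE_SECONDS = 10.0


def scene_at(elapsed, scenes=SCENES, fade=FADE_SECONDS):
    """Return (current_scene, next_scene, blend) for a looping schedule.

    blend stays 0.0 while only current_scene is shown, then ramps linearly
    to 1.0 over the last `fade` seconds of the slot, crossfading into next_scene.
    """
    if not scenes:
        raise ValueError("schedule must contain at least one scene")
    cycle = sum(duration for _, duration in scenes)
    t = elapsed % cycle  # loop the whole programme indefinitely
    for i, (name, duration) in enumerate(scenes):
        is_last = i == len(scenes) - 1
        if t < duration or is_last:
            next_name = scenes[(i + 1) % len(scenes)][0]
            fade_start = duration - fade
            if fade <= 0 or t < fade_start:
                blend = 0.0
            else:
                blend = min((t - fade_start) / fade, 1.0)
            return name, next_name, blend
        t -= duration


if __name__ == "__main__":
    for seconds in (0, 295, 300, 595):
        print(seconds, scene_at(seconds))
```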

Another way the two projects could be mixed is by incorporating other technologies. For instance, I thought of including (potentially costly) infrared/ultraviolet light projectors whose light could only be seen with the right, well, glasses. However, I think making the experience as seamless as possible would be preferable, i.e. without the need for glasses. Also, synchronising Unity with external devices, although not impossible, could prove harder than expected.

For Unity, I pitched a few other ideas:

- For the skybox (the environment’s ‘sky’), it would be nice to have 360° footage or images from within the Hudson River (this works; I tried it with some of my own 360° images)

- Kinect could be used to introduce interaction between people inside the Dome and Unity, although Kinect gets a bit unreliable when many people are present in the scene

- Physical sensors could be an option, although since the dome would likely be large, wiring all of them to, let’s say, an Arduino would be a hassle: between the setup itself and the physical issues that would likely undermine an effective WYSIWYG concept, it probably would not work seamlessly in the long term (lots of people around anything triggers Murphy’s law in no time)

- VR controllers are still an option, although letting many different people interact with the Dome would be cool and, IMHO, preferable

- Potentially one of the easiest ways would be, paradoxically, a simple overhead webcam that registers people via OpenCV, or even a simple Processing application, to detect people’s positions; depending on those positions, things could happen in the scene, even plastic being added to the environment and then removed, which kind of ties in with the polluters field game concept

- Although I didn’t voice this, I feel that involving any sort of UI in a 360° environment is a bad idea: a Dome for projecting 360° environments is primarily meant to create a compelling, immersive experience. UI just redirects attention to itself and to the game’s rules, which people likely won’t learn even in a few minutes
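
A rough sketch of the overhead-webcam idea, assuming the camera frame has already been reduced to a small 0/1 mask of "someone is standing here" pixels. In OpenCV that step would typically be background subtraction plus thresholding, and `cv2.connectedComponentsWithStats` could replace the hand-written flood fill below. The floor zones and the plastic rule are hypothetical, just to show how detected positions could drive the polluters field idea.

```python
from collections import deque


def find_people(mask, min_pixels=3):
    """Return (row, col) centroids of 4-connected foreground blobs in a 0/1 mask.

    The mask stands in for a thresholded, downscaled overhead webcam frame
    (e.g. after background subtraction). Blobs smaller than min_pixels are
    treated as noise rather than people.
    """
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Breadth-first flood fill collects every pixel of this blob.
            seen[r][c] = True
            queue = deque([(r, c)])
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(pixels) >= min_pixels:
                centroids.append((sum(p[0] for p in pixels) / len(pixels),
                                  sum(p[1] for p in pixels) / len(pixels)))
    return centroids


def occupied_zones(centroids, width, n_zones):
    """Hypothetical zoning: split the floor into n_zones vertical strips."""
    return {min(int(cx * n_zones / width), n_zones - 1) for _, cx in centroids}


def update_plastic(plastic, occupied, max_plastic=5):
    """Hypothetical game rule: standing in a zone clears its plastic,
    while empty zones slowly accumulate more."""
    return [0 if z in occupied else min(p + 1, max_plastic)
            for z, p in enumerate(plastic)]


if __name__ == "__main__":
    frame = [
        [1, 1, 0, 1, 0, 0],  # the lone pixel at column 3 is noise
        [1, 1, 0, 0, 0, 0],
        [0, 0, 0, 0, 1, 1],
        [0, 0, 0, 0, 1, 1],
    ]
    people = find_people(frame)
    zones = occupied_zones(people, width=6, n_zones=3)
    print(people, zones, update_plastic([3, 3, 3], zones))
```

Working on a heavily downscaled mask keeps this cheap enough to run every frame, and the minimum blob size is a crude but simple guard against flickering noise pixels.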

Other people voiced further ideas:

- A sketched animation of the environment, i.e. an experience with little or no interaction (I only pointed out that this would be tons of work)

- Simplifying the experience so that it contains no interaction

Also, a few notes about other projects:

- Family Feud (Trash Trivia) needs a screen

- And a program to visualise something on it

- Some GitHub projects were recommended during the class

- As one option, I’d get this up and running with a simple Processing application, but a Wizard of Oz operator needs to be present to reveal, well, the answers

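
As a rough, hypothetical sketch of the Wizard of Oz setup for Trash Trivia (in Python rather than Processing; the class and method names are made up), the only state the program really needs is which answers the operator has uncovered so far:

```python
class FeudBoard:
    """Hypothetical Family Feud-style board for Trash Trivia: answers stay
    hidden until a human operator (the Wizard of Oz) reveals them."""

    def __init__(self, question, answers):
        self.question = question
        self.answers = list(answers)  # (text, points) pairs, top answer first
        self.revealed = [False] * len(self.answers)

    def reveal(self, index):
        """Operator action: uncover answer `index` and return the points it adds.
        Revealing the same answer twice scores nothing the second time."""
        if self.revealed[index]:
            return 0
        self.revealed[index] = True
        return self.answers[index][1]

    def render(self):
        """Text version of what the screen would show."""
        lines = [self.question]
        for i, ((text, points), shown) in enumerate(zip(self.answers, self.revealed), start=1):
            lines.append(f"{i}. {text} ({points})" if shown else f"{i}. ________")
        return "\n".join(lines)


if __name__ == "__main__":
    # Placeholder content only; the real questions would come from the team.
    board = FeudBoard("Placeholder question?",
                      [("Answer A", 40), ("Answer B", 25), ("Answer C", 10)])
    board.reveal(1)  # e.g. the operator presses "2" when a player guesses answer B
    print(board.render())
```

In a Processing version, `reveal` would presumably be called from the `keyPressed()` handler, so the operator can uncover answers from a keyboard while players only see the screen.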

But, as also mentioned during the lecture, all of this is likely doable in many different ways. It’s kind of a cool age we’re living in: potentially anything can be created in so many elaborate ways, or simple ones too, which are just as cool or even cooler (if you think about it, evolution is kind of simple in how it got us as a species to where we are, even though the end product is, well, as elaborate as it gets; obviously, this depends on each individual’s philosophical beliefs).

That’s all for now.

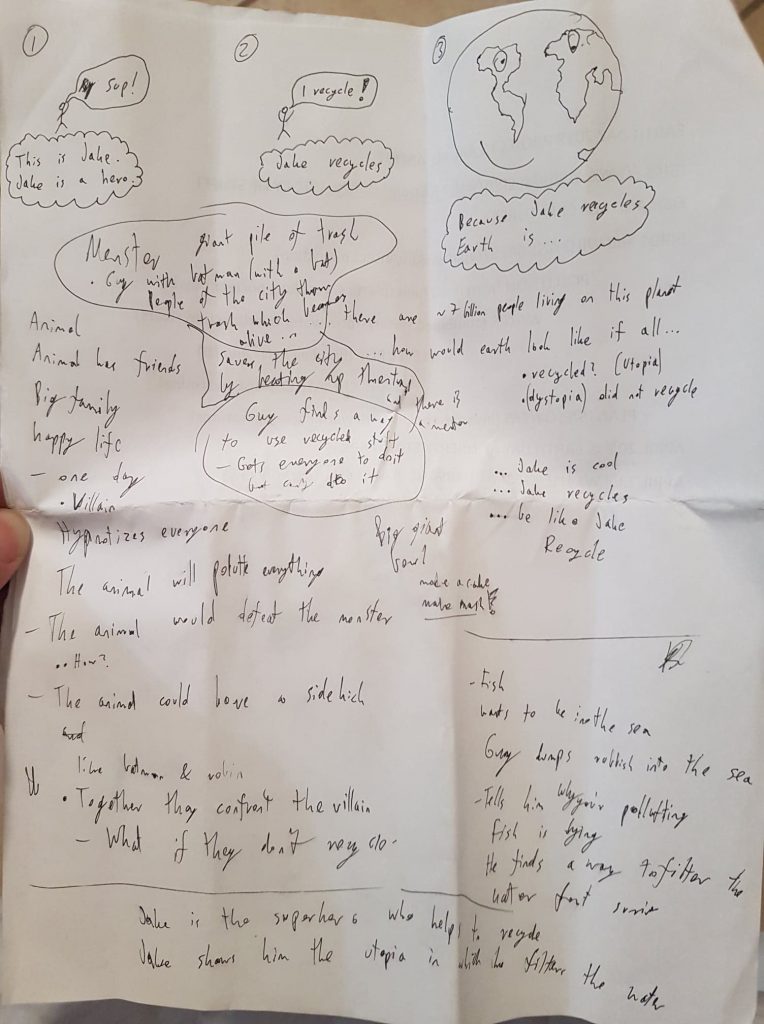

EDIT: I found the notes I made on one of the last LCCS trips; I hope my handwriting is legible to others 🙂

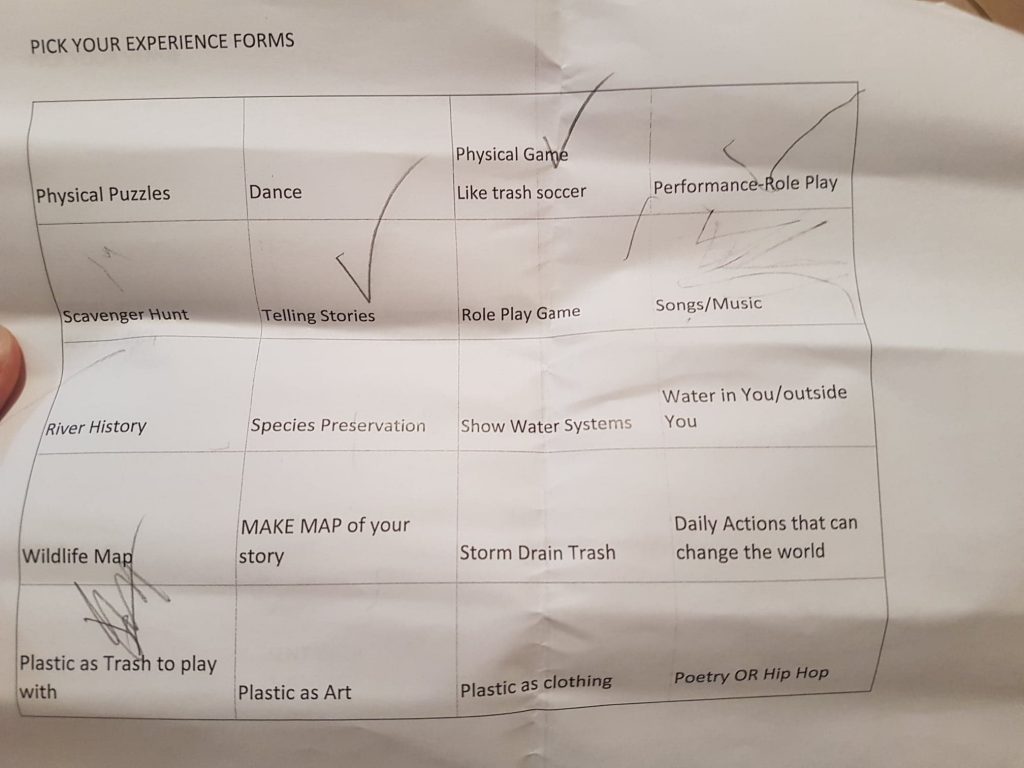

One of the Trash Trivia members filled in this sheet: